Hi everyone, it has been 5 months since the Hackathon, and I'd like to present a milestone of TiFlink.

TiFlink is a project intended to provide better stream processing integration between Flink and TiKV. It provides some features that we think will be useful for users:

- Ships with a pure-Java implementation of a CDC client, which frees users from running and maintaining TiCDC and message queues like Kafka.

- Stronger consistency guarantees. Working with a Snapshot Coordinator, the distributed Flink tasks can now read and write transactionally.

- Unified stream and batch processing, which is especially suitable for materialized views. The Flink job can first fully scan a snapshot of the source tables, then seamlessly switch to stream processing (incremental view maintenance).
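
To illustrate the snapshot-then-stream switch from the last point, here is a minimal in-memory sketch, not the actual TiFlink code. `ChangeEvent`, `SnapshotThenStream` and `build` are hypothetical names, the "view" is just a copy of one table, and the sketch assumes the change log is ordered by commit timestamp.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical change event; a null value means the row was deleted. */
final class ChangeEvent {
    final long commitTs;
    final long key;
    final Integer value;

    ChangeEvent(long commitTs, long key, Integer value) {
        this.commitTs = commitTs;
        this.key = key;
        this.value = value;
    }
}

final class SnapshotThenStream {
    /**
     * Batch phase: start from the rows scanned at snapshotTs.
     * Stream phase: apply only the changes committed after snapshotTs.
     * Assumes changeLog is ordered by commitTs.
     */
    static Map<Long, Integer> build(Map<Long, Integer> snapshot,
                                    long snapshotTs,
                                    List<ChangeEvent> changeLog) {
        Map<Long, Integer> view = new TreeMap<>(snapshot);
        for (ChangeEvent e : changeLog) {
            if (e.commitTs <= snapshotTs) {
                continue; // already reflected in the snapshot
            }
            if (e.value == null) {
                view.remove(e.key);
            } else {
                view.put(e.key, e.value);
            }
        }
        return view;
    }
}
```

Skipping events at or before the snapshot timestamp is what makes the switch seamless in this sketch: no change is applied twice and none is missed, as long as the change log still covers everything after the snapshot.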

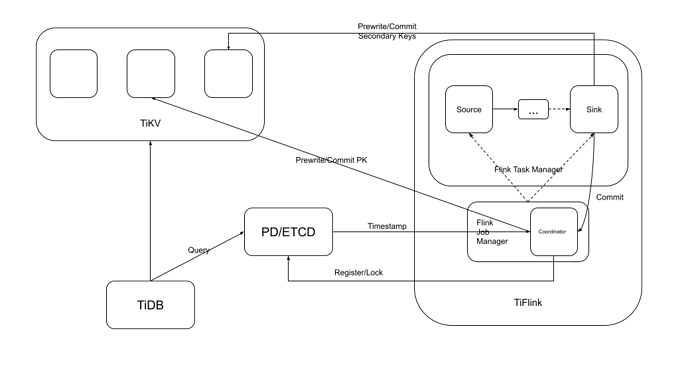

Below is an overview of the whole system design.

The key idea here is to run a gRPC service as a coordinator that each subtask of the Flink job uses to coordinate transactions. Within each transaction, all workers have the same view of the start time, commit time and primary key, so they can cooperate to read and commit changes to TiKV using MVCC and the Percolator model.
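
To make the coordinator idea a bit more concrete, here is a minimal in-memory sketch, not the actual TiFlink code. The names `SnapshotCoordinator`, `TxnInfo`, `open` and `commit` are hypothetical, a local counter stands in for the timestamp oracle, and the way the primary key is derived is purely an assumption for illustration. The real coordinator is a gRPC service.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical view of one transaction, shared by all workers. */
final class TxnInfo {
    final long checkpointId;
    final long startTs;
    final String primaryKey;

    TxnInfo(long checkpointId, long startTs, String primaryKey) {
        this.checkpointId = checkpointId;
        this.startTs = startTs;
        this.primaryKey = primaryKey;
    }
}

final class SnapshotCoordinator {
    // Stand-in for a timestamp oracle; an assumption of this sketch.
    private final AtomicLong oracle = new AtomicLong(0);
    private final Map<Long, TxnInfo> opened = new HashMap<>();
    private final Map<Long, Long> commitTimestamps = new HashMap<>();

    /** The first caller creates the transaction; later callers get the same view. */
    synchronized TxnInfo open(long checkpointId) {
        return opened.computeIfAbsent(checkpointId,
                id -> new TxnInfo(id, oracle.incrementAndGet(), "txn-primary-" + id));
    }

    /** The first caller fixes the commit timestamp; later callers reuse it. */
    synchronized long commit(long checkpointId) {
        if (!opened.containsKey(checkpointId)) {
            throw new IllegalStateException("transaction not opened: " + checkpointId);
        }
        return commitTimestamps.computeIfAbsent(checkpointId,
                id -> oracle.incrementAndGet());
    }
}
```

Every worker that calls `open` or `commit` with the same id gets identical values back, which is the property the workers need in order to act as one logical transaction.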

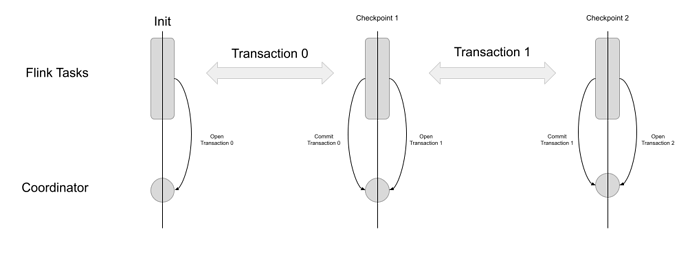

The transactions are bound to checkpoints, which means that at each checkpoint the old transaction is committed and a new one is opened. This guarantees ACID and avoids many anomalies such as partial reads. For a better understanding of consistency in stream processing systems, please refer to this article.
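
The checkpoint-bound lifecycle can be sketched with a toy single-process model. This is only an illustration under simplifying assumptions: `CheckpointBoundSink` and its methods are hypothetical names, and an in-memory map stands in for the target table in TiKV.

```java
import java.util.Map;
import java.util.TreeMap;

final class CheckpointBoundSink {
    // Rows visible to readers (stand-in for the committed target table).
    private final Map<Long, String> committed = new TreeMap<>();
    // Writes buffered in the currently open transaction.
    private final Map<Long, String> pending = new TreeMap<>();
    private long openTxnId = 1;

    /** Buffers a write in the open transaction; readers do not see it yet. */
    void write(long key, String value) {
        pending.put(key, value);
    }

    /**
     * Called at a checkpoint: commits the open transaction as a whole
     * and opens the next one. Returns the id of the committed transaction.
     */
    long onCheckpoint() {
        committed.putAll(pending);
        pending.clear();
        return openTxnId++;
    }

    /** Returns a copy of the rows readers can see right now. */
    Map<Long, String> visibleRows() {
        return new TreeMap<>(committed);
    }
}
```

Between two checkpoints a reader sees either none or all of a transaction's writes, which is how this model avoids partial reads.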

Below is a picture depicting the transaction/checkpoint management:

The latest progress of this project is:

- The CDC client implementation has become stable at TiFlink/client-java. (Ready for MR?)

- The Flink library now works fine with tables that have an integer primary key.

Limitations:

- Only integer primary keys are supported (for now)

- Can only recover from a checkpoint within a limited time (before the change log is GCed on TiKV’s side)

- Can’t start working with a non-empty target table

- Initial scan of source tables can’t take too long (the same change log GC problem).

TODO:

- Merge CDC client to client-java

- Adjust interface of the Flink library and make it ready for beta testing

- Support general and compound primary keys

- Externalize the row serializer and deserializer and make them customizable

- Integrate with TiDB to provide a better materialized view implementation

- Improve flexibility