This is a clickbait. I named it for fun but did have something to talk about TiDB.

The following 15 LOC(which includes well formatted C code and space lines) beats TiDB a lot on performance:

package expression

// #cgo CFLAGS: -O3 -mavx2

// void PlusSIMD(long long *lhs, long long *rhs, long long *res, int n)

// {

// for (int i = 0; i < n; i++)

// {

// res[i] = lhs[i] + rhs[i];

// }

// }

import "C"

func PlusSIMD(lhs, rhs, res []int64, n int) {

C.PlusSIMD((*C.longlong)(&lhs[0]), (*C.longlong)(&rhs[0]), (*C.longlong)(&res[0]), C.int(n))

}

And here gives the benchmark, Native indicates the current implementation of PlusInt arithmetic expression, SIMD indicates the version I implement with cgo:

goos: linux

goarch: amd64

pkg: github.com/pingcap/tidb/expression

cpu: AMD Ryzen 9 5900X 12-Core Processor

BenchmarkVectorizedPlus/Native-1024-20 2546257 493.3 ns/op 0 B/op 0 allocs/op

BenchmarkVectorizedPlus/SIMD-1024-20 6175520 194.2 ns/op 0 B/op 0 allocs/op

PASS

Even if there is overhead of cgo, my 15-LOC version is still 2.5x as fast as native version.

What makes them so different?

The Story

It’s a long story.

Recently, people around are talking about Golang compiler and runtime. Most of topics are saying that “Golang is suck”, but none of us actually knows how suck Golang really is until we get fucked by it.

In purpose of making people who can write code(what ever C++ or Python) be aware of the power of Golang(both compiler and runtime) objectively and fairly, I intend to do a series of experiment to prove something.

The first experiment is about Vectorization.

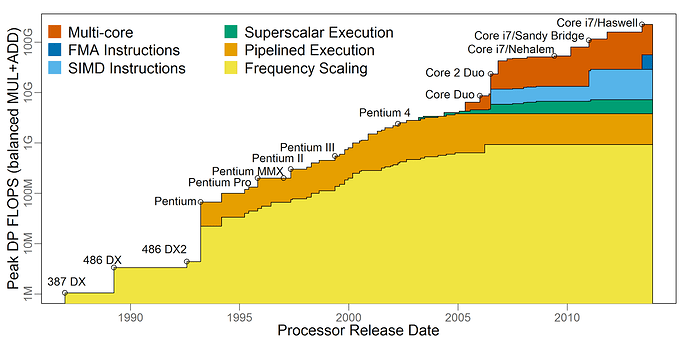

As we know, performance of a compute-intensive system(e.g. OLAP part of Database) is strongly dependent on whether it can exhaust CPU resource or not. Here I found a diagram that shows how different features improve performance of CPU:

According to the diagram we can find that the growth of CPU frequency almost stopped after 2005. At the same time, multi-core and SIMD started to make significant improvement to CPU performance. The difference is, multi-core can let instructions run in parallel while SIMD can reduce instructions by putting multiple variables into one register and compute them with a single instruction.

To take advantage of SIMD, there are several ways:

-

Use intrinsics function(e.g.

_mm256_load_si256) to write vectorized code explicitly -

Let compiler do auto-vectorization optimization for you

-

Claim that your code is vectorized

For the first way, there are only limited compilers have supported intrinsics functions, most of whom are C, C++ compilers(e.g. GCC, Clang, MSVC, ICC). TiDB is written in Golang so developers can not use intrinsics functions. But it doesn’t matter, people won’t do vectorization in this way unless they are pro.

The second way is the most common way. As far as I know, almost all modern compilers have auto-vectorization optimization, that is, if you write a clear loop, for example in C:

int sum;

int vec[n];

for (int i = 0; i < n; i++)

{

sum += vec[i];

}

Compilers can always optimize the code with SIMD instructions.

Wait, I don’t actually know if Golang compiler can do this for us… I believe it has the ability, but we still need to do some verification.

The simplest way is to write a simple loop and check the assembly:

package main

func Sum(a []int) int {

var sum int

for i := 0; i < len(a); i++ {

sum += a[i]

}

return sum

}

func main() {}

Here’s the godbolt link: https://godbolt.org/z/W1znE6TPf

We can see that the assembly doesn’t use any of the SIMD registers, which means the Golang code is not vectorized.

Take a look at assembly of GCC, and you will find the difference: https://godbolt.org/z/cqGfM43eb

Oops, seems Golang compiler doesn’t support auto-vectorization? Unbelievable!

I started searching for the documentations about SIMD support of Golang compiler, and finally find something interesting: A wiki about AVX512 https://github.com/golang/go/wiki/AVX512

While this wiki only introduce the AVX512 instruction set support in Go, not including usage or else. It makes sense since this wiki is written by a Intel guy but not Golang team. I’ll provide the tracking mail and GitHub issue of the context here: https://groups.google.com/g/golang-dev/c/DdQX1mN1Rd4 https://github.com/golang/go/issues/22779

In addition, I’ve tried to search with many different keywords but can not find any official documentation about SIMD or Vectorization.

So I have to accept the reality that Golang cannot do vectorization natively at all. (TAT)

Without SIMD enhancement, programs written in Golang may lose much of performance on modern CPU platforms.

I’d like to find out how much performance we lose from Golang compiler.

Thanks god we have cgo, which is a FFI to call C or C++ code in Golang programs.

To compare the performance of native Golang and C++, I designed an experiment, which was use C to implement a TiDB builtin function powered by GCC auto-vectorization and benchmark it with the native implementation.

Finally, as expected, cgo wins a lot. The result has been pasted at the beginning of this article.

Well, although I was a big fan of Golang, but this time I have to say: Golang is suck, indeed.

Epilogue

But is this the end?

Can we say that “Golang is suck so we should rewrite TiDB with other languages”?

No, not really.

The real world is cruel.

If someone is familiar with implementation of TiDB arithmetic expression, he will point out that my implementation is too naive since the real code is:

func (b *builtinArithmeticPlusIntSig) plusSS(result *chunk.Column, lhi64s, rhi64s, resulti64s []int64) error {

for i := 0; i < len(lhi64s); i++ {

if result.IsNull(i) {

continue

}

lh, rh := lhi64s[i], rhi64s[i]

if (lh > 0 && rh > math.MaxInt64-lh) || (lh < 0 && rh < math.MinInt64-lh) {

return types.ErrOverflow.GenWithStackByArgs("BIGINT", fmt.Sprintf("(%s + %s)", b.args[0].String(), b.args[1].String()))

}

resulti64s[i] = lh + rh

}

return nil

}

Except computing the result, there are also NULL check and overflow handling, which will take 80% of the total execution time(test on my PC).

With the reality, my 15 LOC seems very trivial. What’s worse is that cgo invocation cannot be inlined, which will turn my optimization into regression.

goos: linux

goarch: amd64

pkg: github.com/pingcap/tidb/expression

cpu: AMD Ryzen 9 5900X 12-Core Processor

BenchmarkVectorizedPlus/BenchmarkVectorizedPlus-1024-24 1364712 888.7 ns/op 0 B/op 0 allocs/op

BenchmarkVectorizedPlus/BenchmarkVectorizedPlusSIMD-1024-24 1000000 1067 ns/op 0 B/op 0 allocs/op

PASS

But in fact, the NULL check can be ommitted if the arguments are NOT NULL. We should have specialized a function to handle this, which can prevent unnecessary instructions.

Furthermore, Plus(vector, constant), Plus(constant, constant) and Plus(constant, vector) can have their own specializations. And these specializations will improve performance of Plus function in different situations.

TiDB is not good enough to take advantage of high level functionality(e.g. compiler features), we can make effect by thinking a little more about our code.

And I believe that Golang compiler will become a real modern compiler as soon as TiDB grow up.